In this series of posts, we first described the data privacy issue and privacy tech, and then why many businesses cannot migrate to the cloud. In this post, we’ll tackle the privacy issue from the point of view of hardware.

Privacy tech has given us solutions to safely transfer and store data, but it is still working on an ideal way to process data. As we will describe, the challenge of processing encrypted data in real time can only be met with hardware.

Trusted Execution Environment

One way to process data securely is to move sensitive data into a secure compartment, known as a Trusted Execution Environment (TEE), where operations are performed on it. TEEs are built into some processors as sub-blocks; examples include Apple’s Secure Enclave (found in the iPhone 5s and newer) and Intel’s SGX. CPUs with this feature can offload sensitive data processing to the TEE.

You can think of this technology as the difference between keeping your cash in your wallet and putting it in a safe: both can be stolen, but it’s harder to steal money from a safe.

While TEEs can provide a higher level of security, they do not have the throughput to process large amounts of data, and they are still not fully secure, since they decrypt the data before processing it.

Why Hardware?

Some of the challenges in privacy tech have been solved with improvements in software, such as new algorithms for encryption. More complex problems, such as real-time encrypted data processing, can only be solved using specialized hardware.

When we say software, we mean programs that run on a CPU (Central Processing Unit). CPUs are general-purpose processors meant to handle a wide variety of applications, which means that for specific applications, such as graphics, they may not provide enough compute power. This situation led to the creation of specialized chips called GPUs (Graphics Processing Units). Since some cryptographic workloads are highly parallel, much like graphics, GPUs have also been used to accelerate tasks such as Bitcoin mining.

When it comes to Privacy Enhancing Technologies (PETs), such as fully homomorphic encryption (FHE), we need more specialized chips. GPUs just weren’t built to handle the operations used in these complex cryptographic algorithms.
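To make "computing on encrypted data" concrete, here is a minimal Python sketch. It is not FHE: it uses the Paillier scheme, which is only additively homomorphic, with tiny hard-coded primes that are chosen for illustration and offer no security. The function names (`keygen`, `encrypt`, `decrypt`, `add_encrypted`) are hypothetical. What it does show is the pattern that makes these workloads heavy: every operation on a ciphertext is a large modular exponentiation or multiplication.

```python
import math
import random

# Toy Paillier cryptosystem (additively homomorphic only, NOT FHE).
# The primes below are illustrative assumptions and far too small to be secure.

def keygen(p=1789, q=1861):
    n = p * q
    phi = (p - 1) * (q - 1)
    mu = pow(phi, -1, n)          # modular inverse (Python 3.8+)
    return n, (n, phi, mu)        # public key, private key

def encrypt(n, m):
    n2 = n * n
    while True:                   # pick a random r that is invertible mod n
        r = random.randrange(1, n)
        if math.gcd(r, n) == 1:
            break
    # c = (1 + n)^m * r^n mod n^2
    return pow(n + 1, m, n2) * pow(r, n, n2) % n2

def decrypt(priv, c):
    n, phi, mu = priv
    l_value = (pow(c, phi, n * n) - 1) // n
    return l_value * mu % n

def add_encrypted(n, c1, c2):
    # Multiplying ciphertexts adds the underlying plaintexts.
    return c1 * c2 % (n * n)

pub, priv = keygen()
c_sum = add_encrypted(pub, encrypt(pub, 120), encrypt(pub, 45))
total = decrypt(priv, c_sum)      # the party doing the addition never sees 120 or 45
```

Real FHE schemes support both addition and multiplication on ciphertexts and work with far larger parameters, which is where the compute cost grows well beyond what this toy suggests.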

ASICs to the Rescue

Application Specific Integrated Circuits (ASICs) are chips that implement a specific task or set of tasks and are optimized for the performance and efficiency needs of those tasks.

To achieve significant acceleration of PETs, such as FHE and zero-knowledge proofs (ZKP), we need an architecture customized for these specific algorithms: one that is optimized to run the mathematical operations, such as NTTs (Number Theoretic Transforms) and modular multiplication, that are the building blocks of FHE and ZKP.
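As a rough illustration of these building blocks, the following Python sketch implements a textbook recursive radix-2 NTT and uses it to multiply two polynomials. The modulus `P` and generator `G` are a commonly used NTT-friendly pair picked here as an assumption, and the function names are hypothetical. Production FHE and ZKP libraries use different parameters and transform variants (for example, many lattice-based schemes multiply polynomials modulo x^n + 1), so treat this as a sketch of the idea rather than of any particular scheme.

```python
# Toy NTT over the prime field Z_P; parameters are illustrative assumptions.
P = 998244353   # prime of the form c * 2^k + 1 (119 * 2^23 + 1)
G = 3           # a primitive root modulo P

def ntt(a, invert=False):
    """Recursive radix-2 NTT of a list whose length is a power of two."""
    n = len(a)
    if n == 1:
        return a[:]
    # w is a primitive n-th root of unity mod P (its inverse when inverting)
    w = pow(G, (P - 1) // n, P)
    if invert:
        w = pow(w, P - 2, P)
    even = ntt(a[0::2], invert)
    odd = ntt(a[1::2], invert)
    out = [0] * n
    wk = 1
    for k in range(n // 2):
        t = wk * odd[k] % P                  # modular multiplication
        out[k] = (even[k] + t) % P           # butterfly: sum
        out[k + n // 2] = (even[k] - t) % P  # butterfly: difference
        wk = wk * w % P
    return out

def intt(a):
    """Inverse NTT: run the transform with inverse roots, then scale by 1/n."""
    n_inv = pow(len(a), P - 2, P)
    return [x * n_inv % P for x in ntt(a, invert=True)]

def poly_mul(f, g):
    """Multiply two polynomials (coefficient lists, lowest degree first)."""
    size = 1
    while size < len(f) + len(g) - 1:
        size *= 2
    fa = ntt(f + [0] * (size - len(f)))
    ga = ntt(g + [0] * (size - len(g)))
    prod = intt([x * y % P for x, y in zip(fa, ga)])
    return prod[: len(f) + len(g) - 1]

# (1 + 2x + 3x^2) * (4 + 5x) = 4 + 13x + 22x^2 + 15x^3
result = poly_mul([1, 2, 3], [4, 5])
```

The inner loop is nothing but modular multiplications, additions, and subtractions repeated across many coefficients, which is the kind of regular, repetitive arithmetic that a dedicated circuit can pipeline and parallelize.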

ASICs are the ideal technology for a hardware implementation of FHE/ZKP, since only ASICs can provide the performance and efficiency needed to meet this challenge.

Imagine the Future

If we could create an ASIC-based solution for PETs, what would the future look like? We could make the public cloud a secure, trusted environment, with massive acceleration and energy efficiency.

Technology that meets these needs will revolutionize access to data for the biggest global industries.

Example 1: Age Verification

Today, when you try to enter a club or buy alcohol, you present your driver’s license, freely giving the salesperson all your information: name, age, address, and so on.

In the future, you will use a ZKP to verify that you are over the minimum age, without revealing any personal information.

Example 2: Reverse Time Migration

Today, the oil and gas industry uses a method called Reverse Time Migration (RTM) to produce high-resolution images of subsurface rock structures. RTM can help identify potential oil and gas reserves, and can eliminate the need for fracking. It is also a complex, computationally intensive process that requires a significant amount of computing power.

The data processed with RTM is so sensitive that companies would rather buy supercomputers to run RTM than risk exposing the data to competitors by storing it online.

In the future, this industry can run RTM on FHE-encrypted data in the cloud, on the most powerful servers available, saving huge infrastructure costs and getting better results.

Example 3: Cancer Research

Today, one of the factors that slows research into a cure for cancer is the lack of sufficient data. Researchers can only use data that patients consent to provide, such as through clinical trials, medical records, or other voluntary platforms.

In the future, insights can be gained by using FHE to run computations on encrypted patient data, without ever exposing the underlying records. Imagine if researchers could analyze every case of cancer in a whole country, or even around the whole world. A dataset of this size could significantly reduce research time and dramatically advance cures for cancer.

And it’s not only for cancer, but for all diseases.

Wrap-up

Privacy is the biggest problem facing businesses and governments, and they are increasingly turning to privacy tech for the answer. But privacy tech is only a viable solution when it is accelerated by specialized hardware. ASICs provide the performance, protection, and energy efficiency needed to run cutting-edge technologies like FHE and ZKP in real time to safeguard sensitive data.

Businesses and governments can only modernize their compute infrastructure, and break the boundaries of science, medicine, healthcare, and space exploration, when the cloud becomes a trusted environment.

This is the Holy Grail of Cloud Computing.

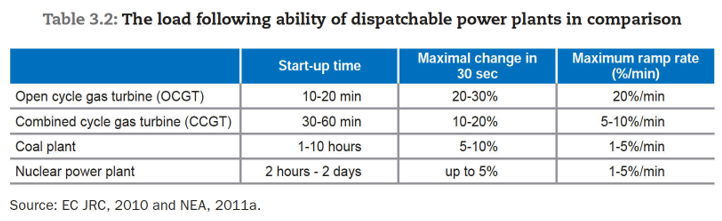

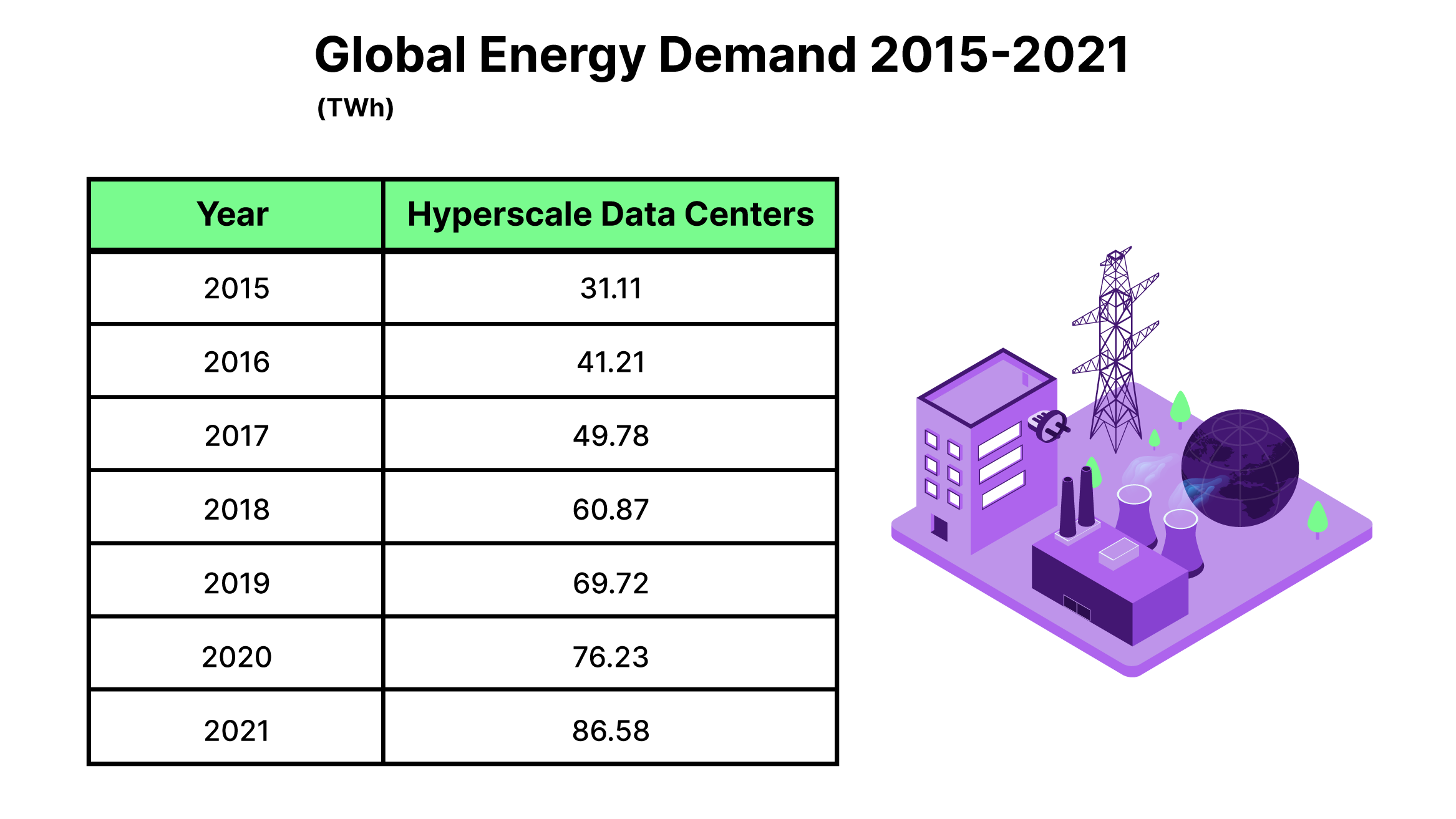

Figure 1: Global Energy Demand

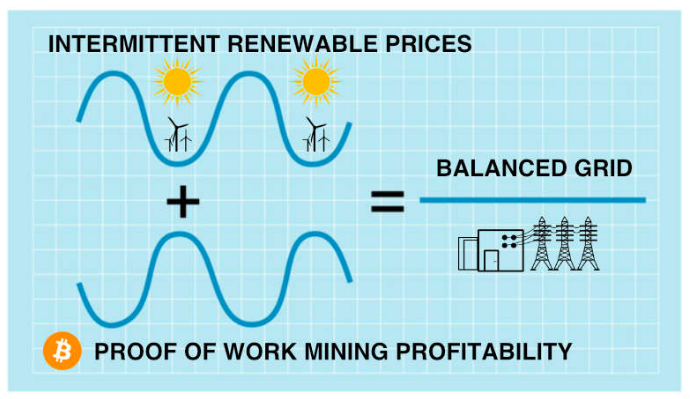

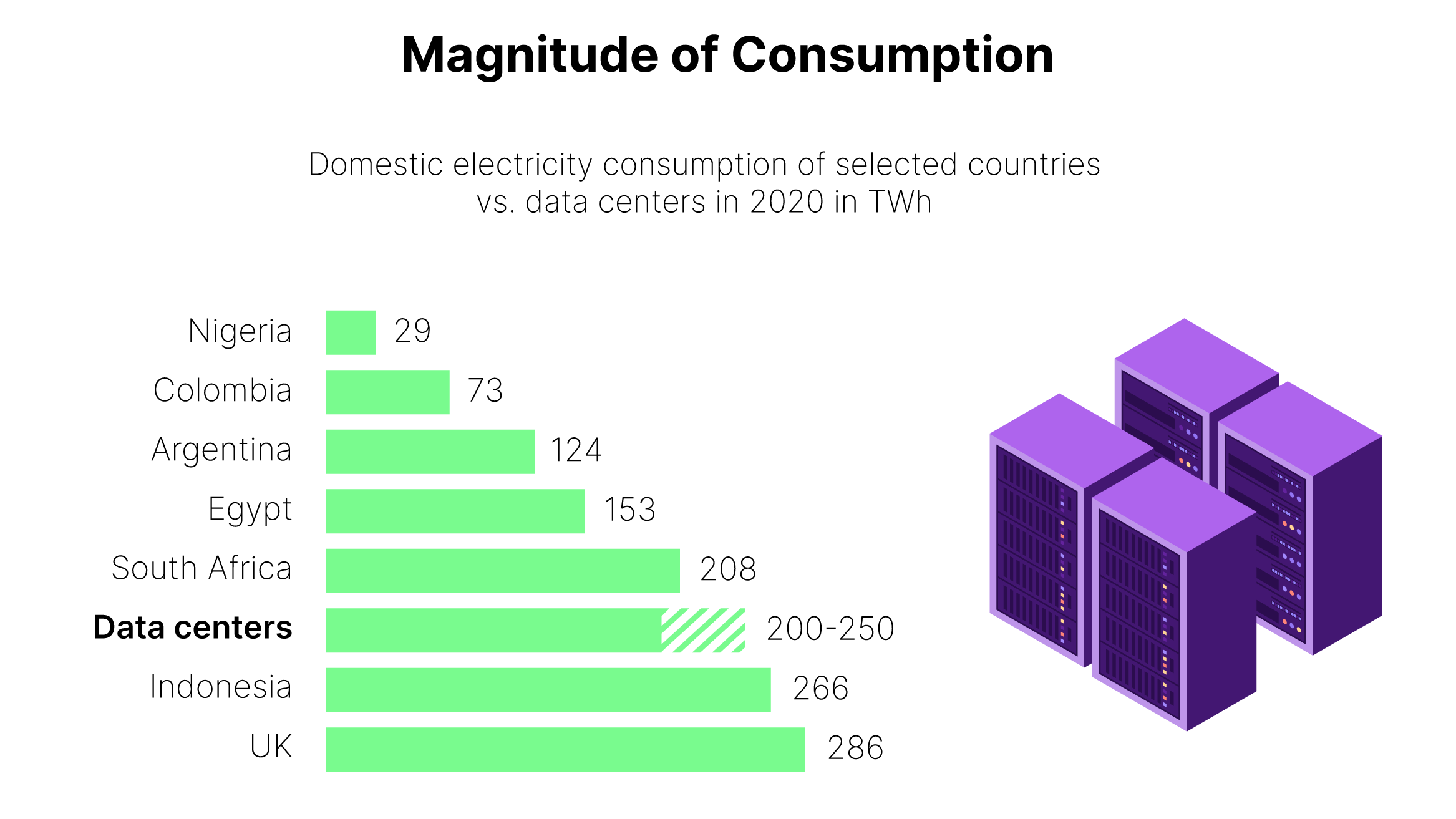

Figure 2: Magnitude of Consumption

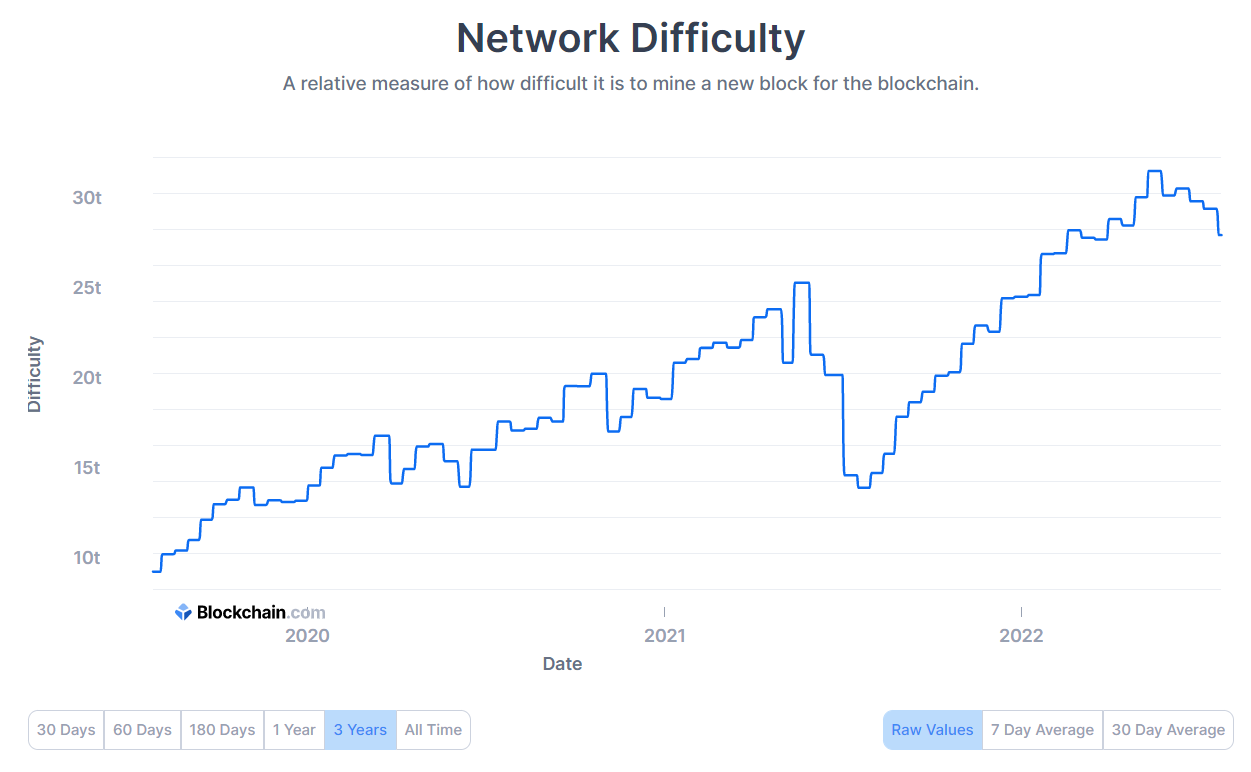

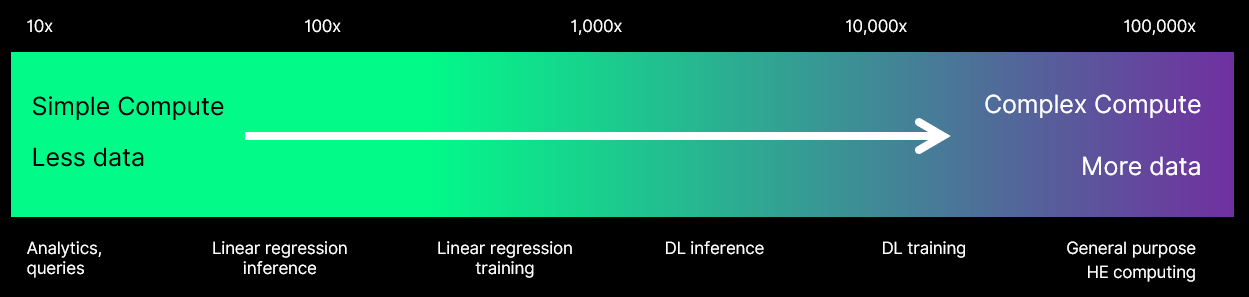

Figure 3: Compute Acceleration